The second part in a series on understanding shaders, covering how data gets sent between shaders and your app, how shaders are created and more.

I wrote a post about shaders recently - it was a primer, a "What the heck are shaders?" type of introduction. You should read it if you haven't, as this post is a continuation of the series. This article is a little deeper down the rabbit hole, a bit more technical but also a high level overview of how shaders are generally made, fit together, and communicated with.

As before, this post will reference WebGL/OpenGL specific shaders but this article is by no means specific to OpenGL - the concepts apply to many rendering APIs.

sidenote:

I was overwhelmed by the positive response and continued sharing of the article, and I want you to know that I appreciate it.

brief “second stage” overview

This article will cover the following topics:

- The road from text source code to active GPU program

- Communication between each stage, and to and from your application

How shaders are created

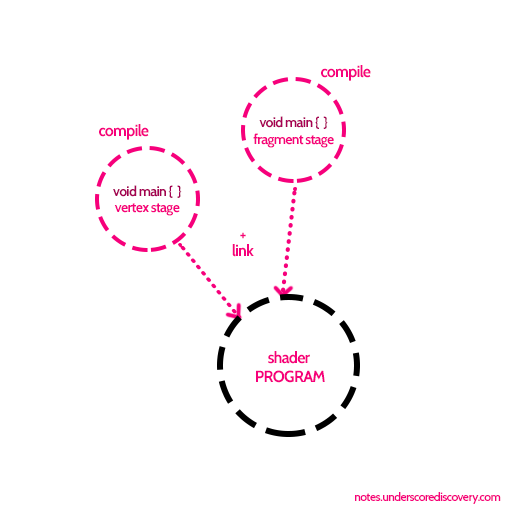

Most rendering APIs share a common pattern when it comes to programming the GPU. The pattern consists of the following :

- Compile a vertex shader from source code

- Compile a fragment shader from source code*

- Link them together, this is your shader program

- Use this program ID to enable the program

*Intentionally keeping it simple, there are other stages etc. This series is for those learning and that is ok.

For a simple example, have a look at how WebGL would do it. I am not going to get TOO specific about it, just show the process in a real world use case.

There are some implied variables here: vertex_stage_source and fragment_stage_source are assumed to contain the shader code itself.

1 - Create the stages first

var vertex_stage = gl.createShader(gl.VERTEX_SHADER);

var fragment_stage = gl.createShader(gl.FRAGMENT_SHADER);

2 - Give the source code to each stage

gl.shaderSource(vertex_stage, vertex_stage_source);

gl.shaderSource(fragment_stage, fragment_stage_source);

3 - Compile the shader code, this checks for syntax errors and such.

gl.compileShader(vertex_stage);

gl.compileShader(fragment_stage);

Now that we have the stages compiled, we link them together to create a single program that can be used to render.

//this is your actual program you use to render

var the_shader_program = gl.createProgram();

//It's empty though, so we attach the stages we just compiled

gl.attachShader(the_shader_program, vertex_stage);

gl.attachShader(the_shader_program, fragment_stage);

//Then, link the program. This will also check for errors!

gl.linkProgram(the_shader_program);

Finally, when you are ready to use the program you created, you simply use it :

gl.useProgram(the_shader_program);

Simple complexity

This seems like a lot of code for something so fundamental, and it can be a lot of boilerplate but remember that programming is built around the concept of repeating tasks. Make a function to generate your shader objects and your boilerplate goes away, you only need to do it once. As long as you understand how it fits together, you are in full control of how much boilerplate you have to write.
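As a rough sketch of such a function, assuming a WebGL context is handed in as gl: the names compile_stage and create_program are my own, chosen for illustration. The status checks are there because compileShader and linkProgram report failure through a status flag and an info log, rather than by throwing.

```javascript
// Compile one stage and fail loudly if the source does not compile.
function compile_stage(gl, type, source) {
  var stage = gl.createShader(type);
  gl.shaderSource(stage, source);
  gl.compileShader(stage);
  // compileShader does not throw, so ask for the status explicitly
  if (!gl.getShaderParameter(stage, gl.COMPILE_STATUS)) {
    var log = gl.getShaderInfoLog(stage);
    gl.deleteShader(stage);
    throw new Error("shader compile failed: " + log);
  }
  return stage;
}

// Build a linked program from vertex and fragment source code.
function create_program(gl, vertex_stage_source, fragment_stage_source) {
  var vertex_stage = compile_stage(gl, gl.VERTEX_SHADER, vertex_stage_source);
  var fragment_stage = compile_stage(gl, gl.FRAGMENT_SHADER, fragment_stage_source);

  var program = gl.createProgram();
  gl.attachShader(program, vertex_stage);
  gl.attachShader(program, fragment_stage);
  gl.linkProgram(program);
  // linking reports errors the same way, through a status flag and a log
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    var log = gl.getProgramInfoLog(program);
    gl.deleteProgram(program);
    throw new Error("program link failed: " + log);
  }
  return program;
}
```

With something like this in place, creating a program is a single call to create_program(gl, vertex_stage_source, fragment_stage_source), and a broken shader tells you why it failed instead of silently rendering nothing.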

Pipeline communications

As discussed in part one - the pipeline for a GPU program consists of a number of stages that are executed in order, feeding information from one stage to the next and returning information along the way.

The next most frequent question I come across when dealing with shaders is how information travels between your application and the shaders, and between the different stages.

The way it works is a little confusing at first, it's very much a black box. This confusion is also amplified by "built in" values that magically exist. It's even more confusing because there are deprecated values that should never be used - in every second article. So when someone shows you "the most basic shader" it's basically 100% unknowns at first.

Aside from these things though, like the rest of the shading pipeline, a lot of it is very simple in concept and likely something you will grasp pretty quickly.

Let's start with the built in values, because these are the easiest.

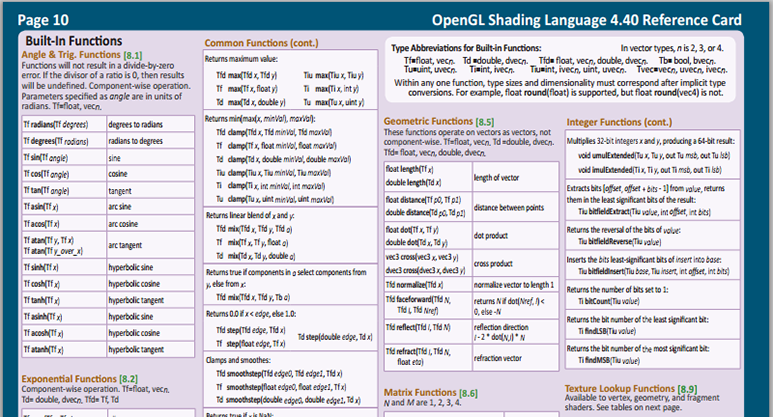

Built in functions

All shader languages have built in language features to complement programming on the graphics hardware. For example, GLSL has a function called mix, which is a linear interpolation function (often called lerp) and is very useful when programming on the GPU. You should look these up. Depending on your platform/shader language, there are many functions that may be new concepts to you, as they don't really occur by default in other disciplines.

Another important note about the built in functions - these functions are often handled by the graphics hardware intrinsically, meaning that they are optimized and streamlined for use. Barring any wild driver bugs or hardware issues, these are often faster than rolling your own code for the functions they offer - so you should familiarize yourself with them before hand-writing small maths functions and the like.
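To make the mix example concrete, here is a small sketch of what it computes for plain numbers, written on the application side in JavaScript. It is for illustration only: inside a shader you would call the built in mix, and the quarter variable is just an example value of my own.

```javascript
// JavaScript version of what GLSL's mix(x, y, a) computes for single numbers:
// a blend from x (when a is 0.0) to y (when a is 1.0).
function mix(x, y, a) {
  return x * (1.0 - a) + y * a;
}

// blend a quarter of the way from 10 to 20
var quarter = mix(10.0, 20.0, 0.25);
```

In GLSL the same function also accepts vectors, which is why it shows up so often for blending colors and positions.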

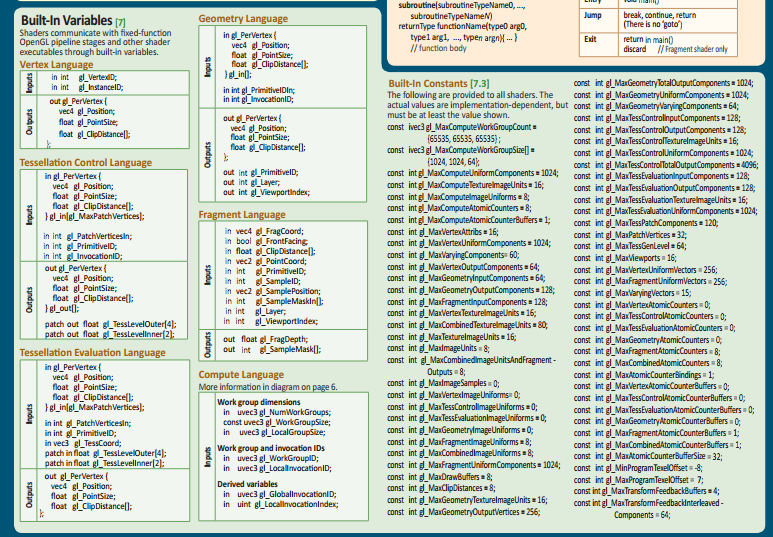

Built in variables

Built in variables are different to the functions, they store values from the state of the program/rendering pipeline, rather than operating on values. A simple example would be when you are creating a pixel shader, gl_FragCoord exists, and contains the window-relative coordinates of the current fragment. As with the function list, they are often documented and there are many to learn, so don't worry if there seem to be a lot. You learn about them and use them only when you need to in practice. Every shader programmer I know remembers a subset by heart and has a reference on hand at all times.

These values are implicit connections between the pipeline and code you write.

staying on track

To avoid the “traps” of deprecated functions, as with any API for any programming language, you just have to read the documentation. It's the same principle as targeting bleeding edge features - you check the status in the API level you want to support, you make sure your requirements are met, and you avoid things that are clearly marked as deprecated for that API level and above. It's irrelevant that they were changed and swapped before that - focus only on what you need, and forget its history.

Edit: Since posting these, an amazing resource has come up for figuring out the availability and usage of OpenGL features: http://docs.gl/

Most APIs provide really comprehensive "quick reference" sheets, jam packed with every little detail you would need to know, including version, deprecation, and signatures. Below are some examples from the OpenGL 4.4 quick reference card.

Information between stages

Also mentioned in part one, the stages can and do send information to the next stage.

Across different versions of APIs, over a few years, newer better APIs were released that improved drastically over the initial confusing names. This means that, across major versions of APIs, you will come across multiple approaches for the same thing.

Remember : The important thing here is the concepts and principles. The naming/descriptions may be specific but it's simply to ground the concept in an existing API. These concepts apply in other APIs, and differ only in use, rather than concept.

stage outputs

vertex stage

In OpenGL, the concept of the vertex shader sending information to the fragment shader was named varying. To use it, you would:

- create a named varying variable inside of the vertex shader

- create the same named varying variable inside of the fragment shader

This allowed OpenGL to know that you meant "make this value available in the next stage, please". In other shader languages the same concept applies, where explicit connections are created, by you, to signify outputs from the vertex shader.

An implicit connection exists for gl_Position which you return to the pipeline for the vertex position.

In newer OpenGL versions, these were renamed to out:

out vec2 texcoord;

out vec4 vertcolor;

fragment stage

We already saw that the fragment shader uses gl_FragColor as an output to return the color. This is an implicit connection. In newer GL versions, out is used in place of gl_FragColor:

out vec4 final_color;

It can also be noted that there are other built in variables (like gl_FragColor) that are outputs. These feed back into the pipeline. One example is the depth value, it can be written to from the fragment shader.

stage inputs

Also in OpenGL, you would "reference" the variable value from the previous stage using varying or, in newer APIs, in. This is an explicit connection, as you are architecting the shader.

in vec2 texcoord;

in vec4 vertcolor;
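Here is a minimal sketch of a matching pair in the newer in/out style, assuming WebGL 2 (which uses GLSL ES 3.00) and holding the sources as JavaScript strings. The attribute names and the declared_names helper are hypothetical, made up for this example. The helper only shows that the connection between stages is made by name.

```javascript
// GLSL ES 3.00 sources (WebGL 2) held as strings.
// vertex_position and vertex_tcoord are hypothetical attribute names.
var vertex_stage_source = `#version 300 es
in vec4 vertex_position;
in vec2 vertex_tcoord;
out vec2 texcoord;            // handed to the next stage
void main() {
  gl_Position = vertex_position;
  texcoord = vertex_tcoord;
}`;

var fragment_stage_source = `#version 300 es
precision mediump float;
in vec2 texcoord;             // same name and type as the vertex output
out vec4 final_color;
void main() {
  final_color = vec4(texcoord, 0.0, 1.0);
}`;

// Hypothetical helper: list the names declared with a qualifier ("in" or "out").
function declared_names(source, qualifier) {
  var pattern = new RegExp("^\\s*" + qualifier + "\\s+\\w+\\s+(\\w+)\\s*;", "gm");
  var names = [];
  var match;
  while ((match = pattern.exec(source)) !== null) {
    names.push(match[1]);
  }
  return names;
}
```

Comparing declared_names(vertex_stage_source, "out") against declared_names(fragment_stage_source, "in") is a cheap way to spot a misspelled name before the linker complains about it.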

The second type of explicit input connection is between your code and the rendering pipeline. Your application code submits data through API functions, for use in the shaders.

In the OpenGL API, these were named uniform, attribute and sampler among others. attribute is vertex specific, and sampler is most commonly used in the fragment stage. In newer OpenGL versions these can take on the form of more expressive structures, but for the purpose of concept, we will only look at the principle :

vertex stage

Attributes are handed into the shader from your code, into the first stage :

attribute vec4 vertex_position;

attribute vec2 vertex_tcoord;

attribute vec4 vertex_color;

This stage can forward that information to the fragments, modified, or as is.

The vertex stage can take uniforms as well. The difference is that an attribute has its own value for each vertex, while a uniform holds the same value for every vertex and fragment in a draw.

fragment stage

uniform vec4 tint_color;

uniform float radius;

uniform vec2 screen_position;

Notice that these variables are whatever I want them to be. I am making explicit connections from my code, like a game, into the shader. The above example could be for a simple lantern effect, lighting up a radius area, with a specific color, at a specific point on screen.

That is application domain information, submitted to the shader, by me.

Another explicit type of connection is a sampler. Images on the graphics card are sampled and can be read inside of the fragment shader. Take note that the value passed in is not the texture ID, it's not the texture pointer, it is the active texture slot. Texturing is usually a state, like use this shader, then use this texture and then draw. The texture slot allows multiple textures to co-exist, and be used by the shaders.

- set active slot 0

- bind texture A

- set active slot 1

- bind texture B

The texture slot determines which texture the shader reads: a sampler always reads the texture bound to the slot it was given!
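In WebGL terms, that could look like the sketch below. It assumes the program is already in use (gl.useProgram), that sampler_loc came from gl.getUniformLocation, and that texture came from gl.createTexture. The function name use_texture is my own. In OpenGL a bind applies to whichever slot is currently active, so the slot is selected before the texture is bound.

```javascript
// Make `texture` available to the shader through the sampler at `sampler_loc`.
// The slot number is what the sampler uniform receives, not the texture itself.
function use_texture(gl, sampler_loc, texture, slot) {
  gl.activeTexture(gl.TEXTURE0 + slot);   // set active slot
  gl.bindTexture(gl.TEXTURE_2D, texture); // bind the texture into that slot
  gl.uniform1i(sampler_loc, slot);        // tell the sampler which slot to read
}
```

Calling it once with slot 0 and once with slot 1, for two different samplers, gives a shader two textures to read from in the same draw.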

fundamental shaders

The most basic shaders you will come across simply take information, and use it to present the information as it is. Below, we can look at how "default shaders" would fit together, based on the knowledge we now have.

This will be using WebGL shaders again, for reference only. These concepts are described above, so they should hopefully make sense now.

As you recall - geometry is a set of vertices. Vertices hold (in this example) :

- a color

- a position

- a texture coordinate

This is a vertex, geometry, so these values will go into vertex attributes and be sent to the vertex stage.

The texture itself, is color information. It will be applied in the fragment shader, so we pass the active texture slot we want to use, as a shader uniform.

The other information in the shader below, is for camera transforms, these are sent as uniforms because they are not vertex specific data. They are just data that I want to use to apply a camera.

You can ignore the projection code for now, as this is simply about moving data around from your app, into the shader, between shaders, and back again.

Basic Vertex shader

//vertex specific attributes, for THIS vertex

attribute vec3 vertexPosition;

attribute vec2 vertexTCoord;

attribute vec4 vertexColor;

//generic data = uniforms, the same between each vertex!

//this is why the term uniform is used, it's "fixed" between

//each fragment, and each vertex that it runs across. It's

//uniform across the whole program.

uniform mat4 projectionMatrix;

uniform mat4 modelViewMatrix;

//outputs, these are sent to the next stage.

//they vary from vertex to vertex, hence the name.

varying vec2 tcoord;

varying vec4 color;

void main(void) {

//work out the position of the vertex,

//based on its local position, affected by the camera

gl_Position = projectionMatrix *

modelViewMatrix *

vec4(vertexPosition, 1.0);

//make sure the fragment shader is handed the values for this vertex

tcoord = vertexTCoord;

color = vertexColor;

}

Basic fragment shader

If we have no textures, only vertices, like a rectangle that only has a color, this is really simple :

Untextured

//WebGL fragment shaders have no default float precision, so declare one

precision mediump float;

//make sure we accept the values we passed from the previous stage

varying vec2 tcoord;

varying vec4 color;

void main() {

//return the color of this fragment based on the vertex

//information that was handed into the varying value!

// in other words, this color can vary per vertex/fragment

gl_FragColor = color;

}

Textured

//WebGL needs a default float precision in fragment shaders

precision mediump float;

//from the vertex shader

varying vec2 tcoord;

varying vec4 color;

//sampler == texture slot

//these are named anything, as explained later

uniform sampler2D tex0;

void main() {

//use the texture coordinate from the vertex,

//passed in from the vertex shader,

//and read from the texture sampler,

//what the color would be at this texel

//in the texture map

vec4 texcolor = texture2D(tex0, tcoord);

//crude colorization using modulation,

//use the color of the vertex, and the color

//of the texture to determine the fragment color

gl_FragColor = color * texcolor;

}

Binding data to the inputs

Now that we know how inputs are sent and stored, we can look at how they get connected from your code. This pattern is very similar again, across all major APIs.

finding the location of the inputs

There are two ways :

- Set the attribute name to a specific location OR

- Fetch the attribute/uniform/sampler location by name

This location is a shader program specific value, assigned by the compiler. You have control over the assignments by name, or, by forcing a name to be assigned at a specific location.

Put in simpler terms :

“radius”, I want you to be at location 0.

vs

Compiler, where have you placed “radius”?

If you use the second way, requesting the location, you should cache this value. You can request all the locations once you have linked your program successfully, and reuse them when assigning values to the inputs.
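Both ways can be sketched in WebGL as follows. The function names and the shape of the returned object are my own, for illustration. Note that the WebGL API only offers the first way for attributes (gl.bindAttribLocation), and that call has to come before linking.

```javascript
// First way: force names to locations. The WebGL API offers this for
// attributes only, and it has to happen BEFORE gl.linkProgram to take effect.
function force_attribute_locations(gl, program, names) {
  names.forEach(function (name, index) {
    gl.bindAttribLocation(program, index, name);
  });
}

// Second way: ask once after a successful link, and keep the answers.
function cache_locations(gl, program, attribute_names, uniform_names) {
  var locations = { attributes: {}, uniforms: {} };
  attribute_names.forEach(function (name) {
    // -1 means the attribute is not active in the linked program
    locations.attributes[name] = gl.getAttribLocation(program, name);
  });
  uniform_names.forEach(function (name) {
    // null means the uniform is not active in the linked program
    locations.uniforms[name] = gl.getUniformLocation(program, name);
  });
  return locations;
}
```

Keeping the cached object next to the program means the render loop never has to look anything up by string.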

Assigning a value to the inputs

This is often application language specific, but again the principle is universal : The API will offer a means to set a value of an input from code.

vertex attributes

Let's use WebGL as an example again, and let's use a single attribute, for the vertex position, to locate and set the position.

var vertex_pos_loc = gl.getAttribLocation(the_shader_program, "vertexPosition");

Notice the name? I am asking the compiler where it assigned the named variable I declared in the shader. Now we can use that location to give it some array of vertex position data.

First, because we are going to use attribute arrays, we want to enable them. If you read this code in simple terms, it says "enable a vertex attribute array for location", where location refers to "vertexPosition".

gl.enableVertexAttribArray(vertex_pos_loc);

To focus on what we are talking about here, some variables are implied :

//this simply sets the vertex buffer (list of vertices)

//as active, so subsequent commands use this buffer

gl.bindBuffer( gl.ARRAY_BUFFER, rectangle_vertices_buffer );

//and this line points the location, or "vertexPosition", at the buffer:

//3 floats per vertex, to match the vec3 declared in the shader

gl.vertexAttribPointer(vertex_pos_loc, 3, gl.FLOAT, false, 0, 0);

There, now we have:

- taken a list of vertex positions, stored them in a vertex buffer

- located the vertexPosition variable location in the shader

- enabled attribute arrays, because we are using arrays

- we set the buffer as active,

- and finally pointed our location to this buffer.

What happens now, is the vertexPosition value in the shader, is associated with the list of vertices from the application code. Details on vertex buffers are well covered online, so we will continue with shader specifics here.
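If a single buffer holds the position, texture coordinate and color of each vertex one after the other, the same calls apply with a stride and an offset. The sketch below assumes that interleaved layout, which is my own assumption and not something the example above requires. The names vertex_layout and point_attributes are hypothetical.

```javascript
// Describe one vertex as a run of floats: position, texture coordinate, color.
// `size` is the number of components and must be 1, 2, 3 or 4.
var vertex_layout = [
  { name: "vertexPosition", size: 3 },
  { name: "vertexTCoord",   size: 2 },
  { name: "vertexColor",    size: 4 }
];

// Point every attribute in the layout at its slice of the given buffer.
function point_attributes(gl, program, buffer, layout) {
  var bytes_per_float = 4;
  var floats_per_vertex = layout.reduce(function (sum, item) {
    return sum + item.size;
  }, 0);
  var stride = floats_per_vertex * bytes_per_float; // bytes from one vertex to the next
  var offset = 0;                                   // bytes from vertex start to this attribute

  gl.bindBuffer(gl.ARRAY_BUFFER, buffer);
  layout.forEach(function (item) {
    var loc = gl.getAttribLocation(program, item.name);
    if (loc !== -1) { // skip attributes the program does not use
      gl.enableVertexAttribArray(loc);
      gl.vertexAttribPointer(loc, item.size, gl.FLOAT, false, stride, offset);
    }
    offset += item.size * bytes_per_float;
  });
  return stride;
}
```

For this layout each vertex is 9 floats, so the stride is 36 bytes and the three attributes start at byte offsets 0, 12 and 20.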

uniform values

As with attributes, we need to know the location.

var radius_loc = gl.getUniformLocation(the_shader_program, "radius");

As this is a simple float value, we use gl.uniform1f. This varies by API in syntax, but the concept is the same across the APIs.

gl.uniform1f(radius_loc, 4.0);

This tells OpenGL that the uniform value for "radius" is 4.0, and we can call this multiple times to update it each render.
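The other uniforms from the lantern example follow the same pattern, with a setter that matches each type. This is a sketch under a few assumptions: locations holds the results of gl.getUniformLocation for each name, fetched once after linking, and the lantern object and the function name are hypothetical. The gl.uniform calls apply to the program currently in use, so the sketch calls gl.useProgram first.

```javascript
// Upload the lantern values for this frame.
function set_lantern_uniforms(gl, program, locations, lantern) {
  gl.useProgram(program); // gl.uniform* calls affect the program currently in use
  // vec4 tint_color
  gl.uniform4f(locations.tint_color,
    lantern.color[0], lantern.color[1], lantern.color[2], lantern.color[3]);
  // float radius
  gl.uniform1f(locations.radius, lantern.radius);
  // vec2 screen_position
  gl.uniform2f(locations.screen_position, lantern.x, lantern.y);
}
```

Calling this once per frame, before drawing, is enough to move the lantern around or change its color over time.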

Conclusion

As this article was already getting quite long, I will continue further in the next part.

As much of this is still understanding the theory, it can seem like a lot to get around before digging into programming actual shaders, but remember there are many places to have a look at real shaders, and try to understand how they fit together :

Playing around with shaders : recap

Here are some links to some sandbox sites where you can see examples, and create your own shaders with minimal effort directly in your browser.

https://www.shadertoy.com/

http://glsl.heroku.com/

http://www.mrdoob.com/projects/glsl_sandbox/

An important factor here is understanding what your framework is doing to give you access to the shaders, which allows you to interact with the framework in more powerful ways, like drawing a million particles in your browser by passing information through textures and encoding values into color information and vertex attributes.

The delay between post one and two was way too long, as I have been busy, but the next two posts are hot on the heels of this one.

Tentative topics for the next posts :

shaders stage three

- A brief discussion on architectural implications of shaders, or "How do I fit this into a rendering framework" and "How to do more complex materials".

- Understanding and integrating new shaders into existing shader pipelines

- Shader generation tools and their output

shaders stage four

- Deconstructing a shader with a live example

- Constructing a basic shader on your own

- A look at a few frameworks' shader approaches

- series conclusion

Follow ups

If you would like to suggest a specific topic to cover, or know when the next installment is ready, you can subscribe to this blog (top right of post), or follow me on twitter, as I will tweet about the articles there as I write them. You can find the rest of my contact info on my home page.

I welcome questions, feedback and topic suggestions.

As before, I hope this helps someone in their journey, and look forward to seeing what you create.