A common theme I run into when talking to developers is that they wish they could wrap their heads around shaders. Shaders seem to solve a lot of problems, and are often referenced as the solution to the task at hand.

But just as often they are seen as a sort of enigma or black box - one so shrouded in complexity that learning them from “basic” examples seems near impossible.

Hopefully, this primer will help those who aren't well versed in shaders to make the transition into using them, where applicable.

What are shaders?

When you draw something on screen, it is generally submitted as some “geometry”, like a polygon or a group of triangles. Even drawing a sprite is drawing some geometry with an image applied.

Geometry is a set of points (vertices) describing the layout, which is sent to the graphics card for drawing. A sprite, like a player or a platform, is usually a “quad”, and is often sent as two triangles arranged in a rectangle shape.

When you send geometry to the graphics card to be drawn, you can tell the graphics card to use custom shaders that will be applied to the geometry, before it shows up on the render.

There are two kinds of shaders to understand for now - vertex and fragment shaders. You can think of a shader as a small function that is run over each vertex, or over each fragment (a fragment is like a pixel), when rendering. If you look at the code for a shader, it resembles a regular function:

void main() {

//this code runs on each fragment, or vertex.

}

It should be noted as well that the examples below reference the OpenGL Shading Language, referred to as GLSL, but the concepts apply to the programmable pipeline in general and are not specific to any rendering API. This information applies to almost any platform or API.

The vertex shader

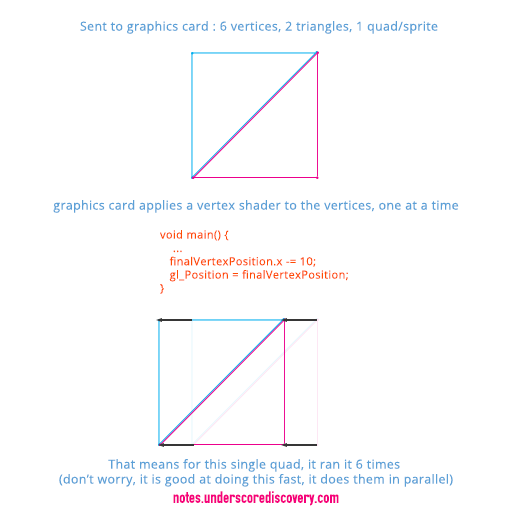

As mentioned, there are vertices sent to the hardware to draw a sprite. Two triangles - and each triangle has 3 vertices, making a total of 6 vertices sent to be drawn.

When these 6 vertices reach the rendering pipeline in the hardware, there is a small program (a shader) that can run on each and every vertex. Remember that the graphics hardware is built for this: it processes many vertices at once, in parallel, which makes it really fast.

That program really only cares about one thing: the position where the vertex will end up (there is a footnote in the conclusion). This means that we can manipulate (or calculate) the correct position for the vertex. Very often this includes camera calculations, which determine how and where the vertex ends up before being drawn.

Let's visualise this below, by shifting the sprite 10 units to the left:
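In code, that shift could look something like the sketch below. It is written in the older GLSL ES 1.00 style (the one WebGL 1 uses), and the names `a_position` and `u_projection` are made up for illustration; your engine or framework will have its own names for the vertex position and the camera matrix. It also assumes x increases to the right, so "left" means subtracting from x.

```glsl
// Vertex shader sketch (GLSL ES 1.00 style).
// a_position and u_projection are hypothetical names, not from any specific engine.
attribute vec2 a_position;   // position of this vertex, in world units
uniform mat4 u_projection;   // camera/projection matrix supplied by the application

void main() {
    // Move this vertex 10 units to the left (assuming +x points right).
    vec2 shifted = a_position - vec2(10.0, 0.0);

    // gl_Position is the built-in output: where this vertex ends up.
    gl_Position = u_projection * vec4(shifted, 0.0, 1.0);
}
```

Because this runs once for each of the 6 vertices, the whole sprite moves, even though the code only ever looks at one vertex at a time.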

If you wanted to, you could apply sine waves, random noise, or any number of calculations on a per-vertex level to manipulate the geometry.
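As a rough sketch of the sine wave idea, the vertex shader below pushes each vertex up or down depending on its x position and the current time. The names `a_position`, `u_projection` and `u_time`, and the two constants, are all assumptions chosen for this example; the application would need to update `u_time` every frame for the wave to animate.

```glsl
// Vertex shader sketch (GLSL ES 1.00 style): a simple travelling sine wave.
// All names and constants here are hypothetical, picked for illustration.
attribute vec2 a_position;   // position of this vertex, in world units
uniform mat4 u_projection;   // camera/projection matrix supplied by the application
uniform float u_time;        // elapsed time in seconds, set by the application each frame

const float AMPLITUDE = 4.0;   // how far a vertex moves up and down, in world units
const float FREQUENCY = 0.25;  // how tightly packed the waves are along x

void main() {
    vec2 pos = a_position;

    // Offset the height based on where the vertex sits along x and on the time,
    // so neighbouring vertices are at slightly different points of the wave.
    pos.y += sin(pos.x * FREQUENCY + u_time) * AMPLITUDE;

    gl_Position = u_projection * vec4(pos, 0.0, 1.0);
}
```

The more vertices the geometry has along x, the smoother the wave looks, since only the vertices themselves are moved.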

Practical example

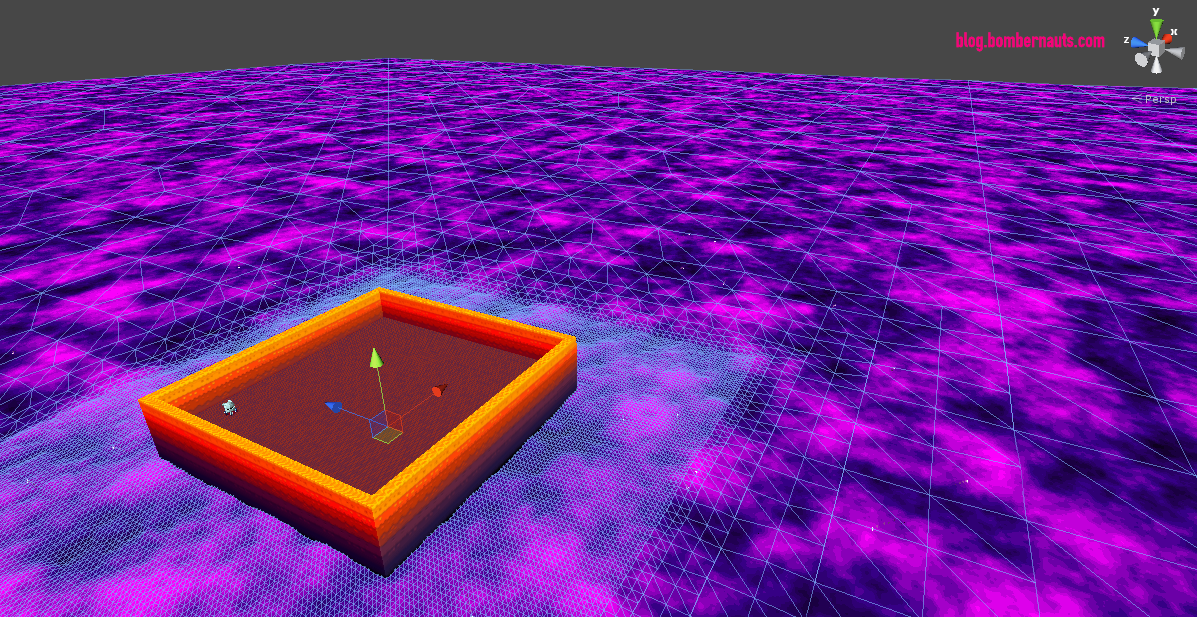

This can be used to generate waves that move vertices in patterns that look like lava or water. All of the following examples were provided by Tyler Glaiel, from Bombernauts.

The lava (purple) area geometry, bunches of vertices!

How it looks when a vertex shader moves it around (notice how the vertices are pushed up and down and around like water, this is the vertex shader at work)

You can have a look at how it looks when it ripples on the blog post here, at the Bombernauts development blog.

The fragment shader

After the vertices are done moving about, they are sent to the next stage of the pipeline to be "rasterized", which means converted into fragments that end up as pixels on screen.

During rasterization, the geometry is broken up into fragments, and each fragment is given to the fragment shader. Fragment shaders are also sometimes referred to as pixel shaders, because some people associate the fragments with pixels on screen, but there is a difference.

Here is a gif from an excellent presentation on Acko.net which usefully demonstrates how sampling works, which is part of the rasterization process. It should help you understand how the vector geometry becomes pixels in the end.

Now, the fragment shader, much like the vertex shader, is run on every single fragment. Again, the hardware is good at doing this really quickly, but it is important to understand that a single line of code in a shader can carry a drastic performance cost due to the sheer number of times the code will be run! (See the note at the end of this section for some interesting numbers.)

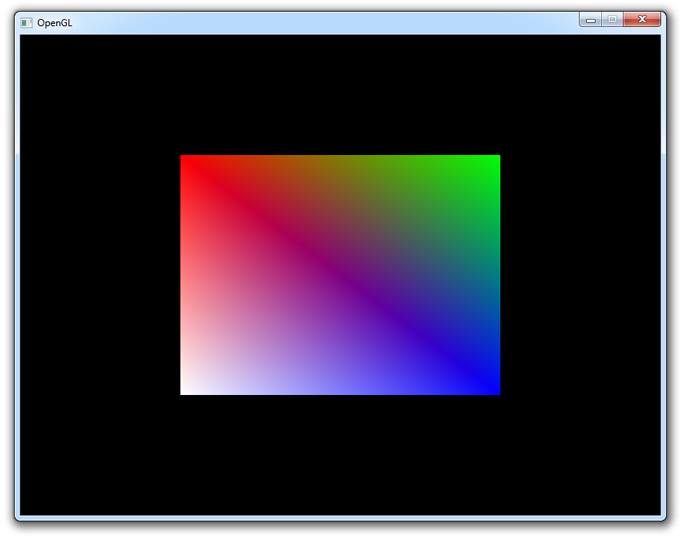

The fragment shader mainly cares about what the resulting color of the fragment becomes. The values it receives from the vertices are interpolated (blended), based on the fragment's location between them. Let's visualize this below:

When I say interpolated, here is what I mean: given a rectangle with 4 corners (arranged as 2 triangles) and the corner vertex colors set to red, green, blue and white, the result is a rectangle that is blended between the colors automatically.
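To make the blending a little more concrete, here is a minimal sketch of a vertex and fragment shader pair in GLSL ES 1.00 style. The names (`a_position`, `a_color`, `u_projection`, `v_color`) are hypothetical. The vertex shader hands the color of its vertex on through a `varying`, and the hardware does the interpolation before the fragment shader sees it.

```glsl
// Vertex shader sketch: pass the per-vertex color along to the next stage.
attribute vec2 a_position;   // position of this vertex
attribute vec4 a_color;      // color assigned to this vertex (e.g. red for one corner)
uniform mat4 u_projection;   // camera/projection matrix supplied by the application

varying vec4 v_color;        // handed to the fragment shader, interpolated on the way

void main() {
    v_color = a_color;
    gl_Position = u_projection * vec4(a_position, 0.0, 1.0);
}
```

```glsl
// Fragment shader sketch: use the interpolated color as the final color.
precision mediump float;

varying vec4 v_color;        // already blended between the triangle's three vertices

void main() {
    // gl_FragColor is the built-in output: the resulting color of this fragment.
    gl_FragColor = v_color;
}
```

Note that neither shader contains any blending code. A fragment halfway between a red vertex and a blue vertex simply receives a `v_color` that is halfway between red and blue.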

Practical example

A fragment shader can be used to blur some or all of the screen before drawing it. In this example, some blur was applied to the map screen below the UI to obtain a tilt shift effect. This is from a game I was working on for a while, and the tilt shift shader came from Martin Jonasson.

For the curious, here is the source for the tilt shift shader along with some notes about separating the x and y passes for a blur, since that has come up a bunch.
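To give a feel for what separating the x and y passes means, here is a small sketch of a single blur pass. This is not the tilt shift shader linked above, just a simplified illustration, and all of the names (`u_texture`, `u_texel_size`, `u_direction`, `v_uv`) are assumptions. It also assumes the vertex shader passes a texture coordinate through as `v_uv`. The idea is to draw the image once with `u_direction` set to (1, 0), then draw that result again with (0, 1).

```glsl
// Fragment shader sketch (GLSL ES 1.00 style): one pass of a separable 5-tap blur.
// All uniform and varying names are hypothetical.
precision mediump float;

uniform sampler2D u_texture;  // the image to blur
uniform vec2 u_texel_size;    // size of one texel: 1.0 / texture size in pixels
uniform vec2 u_direction;     // (1, 0) for the x pass, (0, 1) for the y pass

varying vec2 v_uv;            // texture coordinate, interpolated from the vertices

void main() {
    // Distance between neighbouring samples along the blur direction.
    vec2 offset = u_direction * u_texel_size;

    // Weighted average of this texel and two neighbours on each side.
    // The weights (1, 4, 6, 4, 1) / 16 add up to 1, so brightness is preserved.
    vec4 sum = texture2D(u_texture, v_uv) * 0.375;
    sum += texture2D(u_texture, v_uv + offset) * 0.25;
    sum += texture2D(u_texture, v_uv - offset) * 0.25;
    sum += texture2D(u_texture, v_uv + 2.0 * offset) * 0.0625;
    sum += texture2D(u_texture, v_uv - 2.0 * offset) * 0.0625;

    gl_FragColor = sum;
}
```

Two passes of 5 samples each read 10 texels per fragment, where a single pass covering the same 5 x 5 area would read 25. Given how many fragments there are per frame, that difference adds up quickly.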

An important note on numbers

A game rendered at 1080p, a resolution of 1920x1080 pixels, would be 1920 * 1080 = 2,073,600 pixels.

That is per frame - games usually run at 30 or 60 frames per second. At 60 frames per second, that means (1920 x 1080) x 60, a total of 124,416,000 pixels each second. And that is for a single frame buffer; games usually have multiple buffers as well, for special effects and all kinds of rendering needs.

This is important because you can do a lot with fragment shaders, especially because the hardware is exceptionally good at running them. But when you run into performance problems, it can often come down to how quickly the hardware can process the fragments, and shaders can easily become a bottleneck if you aren't paying attention.

Playing around with shaders

Playing with shaders can be fun. Here are some links to sandbox sites where you can see examples and create your own shaders with minimal effort, directly in your browser.

https://www.shadertoy.com/

http://glsl.heroku.com/

http://www.mrdoob.com/projects/glsl_sandbox/

Conclusion

Recap: shaders are combined into a program made up of parts, and that program, when enabled, is applied to geometry as it is submitted to be drawn.

Vertex shaders: first, applied to every vertex when enabled, each render, and mainly care about the end position of the vertex.

Fragment shaders: second, applied to every fragment when enabled, each render, and mainly care about the resulting color of the fragment.

Because shaders are so versatile, there are many, many things that you can do with them. From complex 3D lighting algorithms down to simple image distortion or coloring, you can do a huge range of things with the rendering pipeline.

Hopefully this post has helped you better understand shaders, and lets you explore the possibilities without being completely confused by what they are and how they work going into it.

footnote

It should be said that there is more you can do with vertex shaders, like vertex colors and uv coordinates, and there is a lot more you can do with fragment shaders as well. But to keep this post a primer, that is for a future post.

Notes on the term “Shaders”

The term “Shader” is often called out as a bit of a misnomer (but only sort of), so be aware of the differences. This post is really about the “programmable pipeline”, as mentioned in bold really early on. The pipeline has stages, and for certain stages you can supply some code to run. A GPU program is made up of code from each programmable stage (vertex, fragment, etc.), compiled into a single unit and then run over the entire pipeline while geometry is submitted for drawing, if that program is enabled.

The stages do a little communicating with each other (for example, the vertex stage hands the vertex color to the fragment stage), and the vertex and fragment stages are the most important to understand first.

I personally feel like the term shader comes from the fact that 99.9% of the time you spend working with the programmable pipeline will be spent on shading things, while the vertex and other stages are often a fraction of the day-to-day use of your average application or game.